For some time I had been looking for backup software for my server. I had a few major requirements:

- integration with Amazon S3 (I wanted to store backups in one of my S3 buckets)

- secure, encrypted backups (my backup data contained all databases and website configurations, so this was important)

- incremental backups (I had many GBs of data, so only the data that changed should be backed up)

Finally, after comparing a few different programs, I chose Duplicity.

Duplicity is open-source software that uses the rsync algorithm, so only the changed parts of files are sent to the archive during an incremental backup. It also supports a number of transfer protocols and storage backends, such as FTP, SCP, SSH, WebDAV, IMAP, Google Drive, Tahoe-LAFS, Amazon S3, Backblaze B2 and many more.

Duplicity can encrypt data with a GnuPG key before uploading it to the archive. It’s also extremely easy to use. You can just type:

duplicity [source_path] [target_path]

But, let’s return to the beginning.

Install Duplicity

I used CentOS 7.4 x86_64. The duplicity package is in the EPEL repository (which you can enable with: sudo yum install epel-release):

sudo yum install duplicity

How to use Duplicity

duplicity [full|incremental] [options] source_directory target_url

You can specify whether Duplicity should make a full or an incremental backup. If you omit the action, Duplicity makes an incremental backup when a previous backup already exists at the target, and a full backup otherwise.
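As a rough sketch of how the full/incremental choice can be controlled: duplicity's --full-if-older-than option starts a new full backup once the last full one is older than the given age. The function name, the paths in the usage comments and the 30-day default are assumptions made for illustration only.

```shell
#!/bin/sh
# Hypothetical helper: make an incremental backup, but start a new full
# backup when the last full one is older than the given age.
# $1 = source directory, $2 = duplicity target URL,
# $3 = maximum age of the last full backup (default 30D, an assumption)
backup_with_periodic_full() {
    duplicity --full-if-older-than "${3:-30D}" "$1" "$2"
}

# Usage (paths are placeholders):
#   backup_with_periodic_full /some/path file:///usr/local/backup
#   duplicity full /some/path file:///usr/local/backup          # always full
#   duplicity incremental /some/path file:///usr/local/backup   # aborts if no full backup exists yet
```

Limiting the length of an incremental chain this way keeps restores faster, because duplicity has to replay fewer increments on top of the last full backup.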

Options

Several important options:

--include [shell_pattern] includes the file or files matched by shell_pattern,

--exclude [shell_pattern] similar to above, but excludes matched files/folders instead,

--name [symbolicname] sets the symbolic name of the backup being operated on,

--copy-links resolves symlinks during backup,

--encrypt-key [key-id] encrypts the backup with the GPG public key identified by key-id. The key-id can be given in any of the formats supported by GnuPG.
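The --include and --exclude options are evaluated in the order they are given, and the first matching pattern decides whether a file is backed up. A minimal sketch of that behaviour, assuming a source tree with public_html and config subdirectories (both names, like the function name and the backup name, are made up for this example):

```shell
#!/bin/sh
# Hypothetical helper: back up only two subdirectories of a source tree.
# The specific includes must come before the catch-all exclude, because
# duplicity stops at the first pattern that matches a file.
# $1 = source directory, $2 = duplicity target URL
backup_selected_dirs() {
    duplicity \
        --include "$1/public_html" \
        --include "$1/config" \
        --exclude '**' \
        --name backup_selected \
        "$1" "$2"
}

# Usage (paths are placeholders):
#   backup_selected_dirs /home/sample file:///usr/local/backup
```

If the --exclude '**' came first, it would match everything and nothing would be backed up.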

I also used a few options which are related to Amazon S3:

--s3-use-new-style uses new-style subdomain bucket addressing

--s3-european-buckets uses European S3 buckets (choose this option if your S3 bucket is in an EU region such as eu-west or eu-central)

Backup

Here are a few examples of a backup:

duplicity /some/path sftp://uid@other.host/some_dir
duplicity full /some/path cf+hubic://container_name
duplicity --exclude /mnt --exclude /tmp --exclude /proc / file:///usr/local/backup
duplicity /some/path webdav://user:password@other.host:port/some_dir

Restore

To restore an entire backup, just swap source_directory and target_url, e.g.:

duplicity sftp://uid@other.host/some_dir /some/path

If you want to restore only one file or folder, use the --file-to-restore option, e.g.:

duplicity --file-to-restore subdir/file sftp://uid@other.host/some_dir /some/path/subdir/file

In the above example, duplicity restores only subdir/file (a path relative to the backed-up directory) and writes it to /some/path/subdir/file.
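Before restoring, it often helps to see what the archive contains and to pick a point in time. The sketch below wraps three duplicity features for that: collection-status, list-current-files and the --time option. The function names and the 3-day default are assumptions, and the URLs in the usage comments are the placeholders from the examples above.

```shell
#!/bin/sh
# Hypothetical helpers around duplicity's inspection and restore actions.

# Show the backup chains (full and incremental sets) stored at the target.
# $1 = duplicity target URL
show_backups() {
    duplicity collection-status "$1"
}

# List the files contained in the most recent backup.
# $1 = duplicity target URL
list_backup_files() {
    duplicity list-current-files "$1"
}

# Restore one file as it was some time ago (3D means three days ago).
# $1 = target URL, $2 = path relative to the backup root,
# $3 = local destination, $4 = age (default 3D, an assumption)
restore_file_from() {
    duplicity restore --time "${4:-3D}" --file-to-restore "$2" "$1" "$3"
}

# Usage:
#   show_backups sftp://uid@other.host/some_dir
#   restore_file_from sftp://uid@other.host/some_dir subdir/file /some/path/subdir/file 1W
```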

Configuration

Setting up Amazon AWS

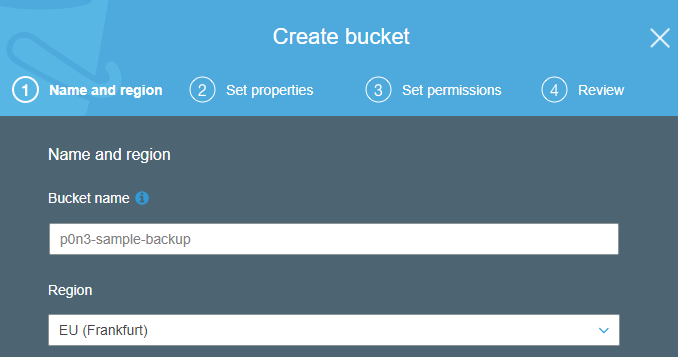

First, log in to your AWS console. Go to Storage > S3 and create a new bucket. Duplicity can also create the bucket for you, but if you do it yourself you can choose whichever region you want.

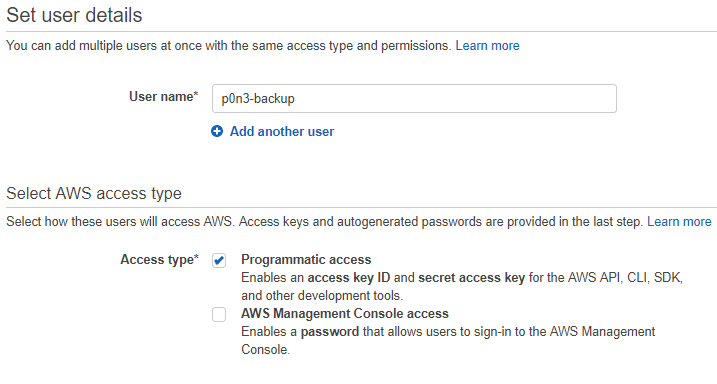

Next, you should create a separate user to isolate access to your account. Go to Security, Identity & Compliance > IAM. Click Users and choose the Add user button. Enter a user name and set the AWS access type to Programmatic access only.

In the next step, attach an existing policy directly, e.g. AmazonS3FullAccess. Check all the settings on the review page and go to the last step. After the user has been created you can view and download the user's security credentials (Access key ID and Secret access key).
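AmazonS3FullAccess grants access to every bucket in the account. If you prefer to limit the backup user to a single bucket, a custom policy along the following lines may be enough. This is only a starting point: the list of actions is my assumption about what duplicity's S3 backend needs and has not been checked against every duplicity version, and the function name is hypothetical. The function just prints the policy so it can be pasted into the IAM console.

```shell
#!/bin/sh
# Hypothetical helper: print an IAM policy restricted to one bucket.
# The action list is an assumption; extend it if duplicity reports
# "access denied" errors.
# $1 = bucket name
print_backup_policy() {
    cat <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": ["s3:ListBucket", "s3:GetBucketLocation"],
      "Resource": "arn:aws:s3:::$1"
    },
    {
      "Effect": "Allow",
      "Action": ["s3:GetObject", "s3:PutObject", "s3:DeleteObject"],
      "Resource": "arn:aws:s3:::$1/*"
    }
  ]
}
EOF
}

# Usage:
#   print_backup_policy p0n3-sample-backup > duplicity-policy.json
```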

Generating GPG keys

Duplicity needs a pair of GPG keys if you want to use encrypted backups. I am going to store the passphrase directly in the backup scripts, so it is recommended to generate a dedicated key pair used only for duplicity. To do this I used the gpg --gen-key command:

[p0n3@hadron sample]$ gpg --gen-key
gpg (GnuPG) 2.0.22; Copyright (C) 2013 Free Software Foundation, Inc.
This is free software: you are free to change and redistribute it.
There is NO WARRANTY, to the extent permitted by law.
Please select what kind of key you want:
   (1) RSA and RSA (default)
   (2) DSA and Elgamal
   (3) DSA (sign only)
   (4) RSA (sign only)
Your selection? 1
RSA keys may be between 1024 and 4096 bits long.
What keysize do you want? (2048) 2048
Requested keysize is 2048 bits
Please specify how long the key should be valid.
         0 = key does not expire
      <n>  = key expires in n days
      <n>w = key expires in n weeks
      <n>m = key expires in n months
      <n>y = key expires in n years
Key is valid for? (0) 0
Key does not expire at all
Is this correct? (y/N) y
GnuPG needs to construct a user ID to identify your key.
Real name: Sample Name
Email address: sample@name.com
Comment:
You selected this USER-ID:
    "Sample Name <sample@name.com>"
Change (N)ame, (C)omment, (E)mail or (O)kay/(Q)uit? O
You need a Passphrase to protect your secret key.
We need to generate a lot of random bytes. It is a good idea to perform
some other action (type on the keyboard, move the mouse, utilize the
disks) during the prime generation; this gives the random number
generator a better chance to gain enough entropy.
.............+++++
gpg: key [key_id] marked as ultimately trusted
public and secret key created and signed.
gpg: checking the trustdb
gpg: 3 marginal(s) needed, 1 complete(s) needed, PGP trust model
gpg: depth: 0 valid: 1 signed: 0 trust: 0-, 0q, 0n, 0m, 0f, 1u
pub 2048R/[key_id] 2017-12-31
Key fingerprint = [cut]
sub 2048R/[cut] 2017-12-31

Remember: if you lose your passphrase or key file, you lose access to your backups. So it is important to export and back up your key (use the gpg --export-secret-keys command).
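A minimal sketch of such a key export, assuming you want both halves of the key pair as ASCII-armored files in a directory of your choice. The function name and the output file names are made up for this example; the key ID is the one printed by gpg --gen-key.

```shell
#!/bin/sh
# Hypothetical helper: export the duplicity key pair into a directory so it
# can be copied somewhere safe, away from the server being backed up.
# $1 = key ID, $2 = output directory
export_backup_key() {
    mkdir -p "$2" && chmod 700 "$2" || return 1
    gpg --armor --export "$1" > "$2/duplicity-public.asc" || return 1
    gpg --armor --export-secret-keys "$1" > "$2/duplicity-secret.asc" || return 1
    # The secret key file must not be readable by other users.
    chmod 600 "$2"/duplicity-*.asc
}

# Usage:
#   export_backup_key [key_id] /root/keys
# On another machine the keys can be imported again with:
#   gpg --import duplicity-public.asc duplicity-secret.asc
```

The exported secret key is still protected by its passphrase, so the passphrase has to be stored safely as well.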

Create a backup to S3 using GPG keys

Now let’s combine everything together:

export AWS_ACCESS_KEY_ID=[aws_access_key_id]
export AWS_SECRET_ACCESS_KEY=[aws_secret_key_id]
PASSPHRASE="[passphrase]" duplicity --s3-use-new-style --s3-european-buckets --exclude /home/sample/logs --name backup_sample --encrypt-key [key_id] /home/sample s3://s3-eu-west-1.amazonaws.com/p0n3-sample-backup

If everything went well, you should see:

Synchronizing remote metadata to local cache...
Last full backup date: none
No signatures found, switching to full backup.
--------------[ Backup Statistics ]--------------
StartTime 1514766005.94 (Mon Jan 1 01:20:05 2018)
EndTime 1514766104.99 (Mon Jan 1 01:21:44 2018)
ElapsedTime 279.05 (3 minutes 39.05 seconds)
SourceFiles 80063
SourceFileSize 15523299677 (14.5 GB)
NewFiles 80063
NewFileSize 15523299677 (14.5 GB)
DeletedFiles 0
ChangedFiles 0
ChangedFileSize 0 (0 bytes)
ChangedDeltaSize 0 (0 bytes)
DeltaEntries 945
RawDeltaSize 7498549 (7.15 MB)
TotalDestinationSizeChange 4338347 (4.14 MB)
Errors 0
----------------------------------------------------------

If you change something in the /home/sample/* files and run the command again, you will see that duplicity now makes an incremental backup.
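A backup is only useful if it can be read back, so one possible follow-up is duplicity's verify action, which restores the archive file by file into a temporary location, compares it with the local directory and exits with a non-zero status if anything differs. The wrapper below is a sketch; its name and messages are assumptions. It expects the same AWS_* and PASSPHRASE environment variables as the backup command.

```shell
#!/bin/sh
# Hypothetical helper: compare a backup with the local directory it was made from.
# $1 = duplicity target URL, $2 = local directory,
# any further arguments are passed to duplicity as options
verify_backup() {
    url="$1"
    dir="$2"
    shift 2
    if duplicity verify "$@" "$url" "$dir"; then
        echo "backup verified: $url"
    else
        echo "verification FAILED: $url" >&2
        return 1
    fi
}

# Usage:
#   verify_backup s3://s3-eu-west-1.amazonaws.com/p0n3-sample-backup /home/sample \
#       --s3-use-new-style --s3-european-buckets --name backup_sample \
#       --exclude /home/sample/logs
```

Files that changed locally since the last backup are reported as differences, so it makes most sense to run this right after a backup.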

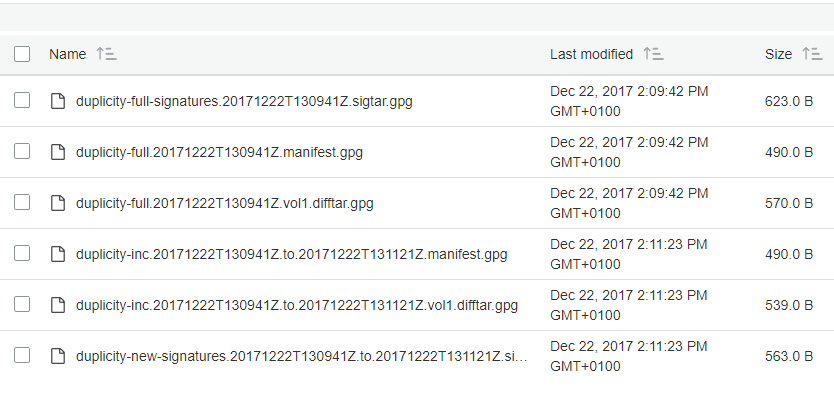

Let’s look at AWS S3:

Set up automatic daily backups

Backup script

OK, now I’ll create a bash script for daily backups of the /var/www folder:

[root@hadron ~]$ mkdir backups
[root@hadron ~]$ cd backups
[root@hadron backups]$ nano backup_www.sh

Content:

#!/bin/bash
export AWS_ACCESS_KEY_ID=[aws_access_key_id]
export AWS_SECRET_ACCESS_KEY=[aws_secret_key_id]
AWS_BUCKET_NAME=p0n3-www-backup
PATH_BACKUP=/var/www
PASSPHRASE="[gpg_passphrase]" duplicity --s3-use-new-style --s3-european-buckets --exclude /var/www/rails-app/log --name backup_www --encrypt-key [gpg_key_id] $PATH_BACKUP s3://s3-eu-west-1.amazonaws.com/$AWS_BUCKET_NAME

The backup script contains our AWS_SECRET_ACCESS_KEY and GPG passphrase, so I highly recommend restricting its permissions:

[root@hadron backups]$ chmod 700 backup_www.sh
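With several backup scripts, the same secrets end up copied into each of them. One possible alternative, sketched below, is to keep them in a single file that only root can read and to load it from every script. The file path, the function name and the permission check are assumptions for illustration, not part of duplicity. The file would contain the three export lines (AWS_ACCESS_KEY_ID, AWS_SECRET_ACCESS_KEY and PASSPHRASE).

```shell
#!/bin/sh
# Hypothetical helper: load backup credentials from one protected file.
# $1 = path to the secrets file (the default path is an assumption)
load_backup_secrets() {
    secrets_file="${1:-/root/backups/.backup_secrets}"
    [ -f "$secrets_file" ] || { echo "missing $secrets_file" >&2; return 1; }
    # Characters 5-10 of the ls mode string are the group and other bits;
    # refuse to use a file that anyone but the owner can access.
    perms=$(ls -l "$secrets_file" | cut -c5-10)
    if [ "$perms" != "------" ]; then
        echo "$secrets_file must not be accessible by group or others" >&2
        return 1
    fi
    . "$secrets_file"
}

# Usage inside a backup script:
#   load_backup_secrets || exit 1
#   duplicity --s3-use-new-style --s3-european-buckets ... /var/www s3://...
```

Rotating the AWS key or the passphrase then means editing one file instead of every script.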

Restore script

Additionally, you can create a script to restore a file or folder. It’s better to do it now than in a crisis situation 😉

[root@hadron backups]$ touch restore.sh
[root@hadron backups]$ chmod 700 restore.sh
[root@hadron backups]$ nano restore.sh

I wanted to use several backup configurations, so my script takes 2 parameters: the first is the backup name and the second is the file or folder to restore. The second parameter is optional (if it is omitted, the entire backup is restored).

#!/bin/bash
export AWS_ACCESS_KEY_ID=[aws_access_key_id]
export AWS_SECRET_ACCESS_KEY=[aws_secret_key_id]
# bucket naming follows the backup script (p0n3-www-backup for "www")
AWS_BUCKET_NAME=p0n3-$1-backup
RESTORE_PATH=/root/backup/restore
# extra duplicity arguments; stays empty when the entire backup is restored
FILE_TO_RESTORE=()
if [ $# -eq 2 ]
then
    # single file/folder restore
    FILE_TO_RESTORE=(--file-to-restore "$2")
    RESTORE_PATH=$RESTORE_PATH/$2
fi
PASSPHRASE="[gpg_passphrase]" duplicity --s3-use-new-style --s3-european-buckets --name backup_$1 "${FILE_TO_RESTORE[@]}" s3://s3-eu-west-1.amazonaws.com/$AWS_BUCKET_NAME "$RESTORE_PATH"

Example:

[root@hadron backups]$ ./restore.sh www domains/p0n3.net/file

Schedule a backup

You can add an entry to /etc/crontab or put the script in /etc/cron.daily. However, I prefer to use a separate file in /etc/cron.d.

[root@hadron backups]$ nano /etc/cron.d/backup

Let’s assume that you have 3 backup configurations. They should run at 1:10, 1:20 and 2:20 every day.

SHELL=/bin/bash
PATH=/sbin:/bin:/usr/sbin:/usr/bin
MAILTO=root
10 1 * * * root /root/backups/backup_db.sh
20 1 * * * root /root/backups/backup_www.sh
20 2 * * * root /root/backups/backup_mail.sh
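Daily backups accumulate in the bucket, so at some point you may want to prune old ones. Duplicity has a remove-older-than action for this; without --force it only lists what it would delete. The wrapper below is a sketch, and the function name and the six-month retention in the usage comment are assumptions.

```shell
#!/bin/sh
# Hypothetical helper: delete backup sets older than a given age.
# $1 = age (for example 6M), $2 = duplicity target URL,
# any further arguments are passed to duplicity as options
prune_old_backups() {
    age="$1"
    url="$2"
    shift 2
    duplicity remove-older-than "$age" --force "$@" "$url"
}

# Usage (could be appended to a backup script):
#   prune_old_backups 6M s3://s3-eu-west-1.amazonaws.com/p0n3-www-backup \
#       --s3-use-new-style --s3-european-buckets --name backup_www
```

Duplicity keeps old backup sets that newer ones still depend on, so pruning only frees space if new full backups are made from time to time.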

Conclusion

And that’s it. Duplicity is extremely easy to use. After a few minutes of configuration you have a daily backup and can restore any file whenever you want. By the way, I strongly recommend exporting and backing up the bash scripts you wrote, in case of emergency.
