For the second time in a row, I joined the hackathon at Alfresco DevCon, this time at DevCon 2019 in Edinburgh. I decided to build an integration between Alexa and Alfresco. I have been using Amazon’s voice assistant (Alexa) at home for several weeks, mainly to control lights, music and timers. Smart speakers are more useful than I expected, which is why I wanted to prepare an AMP module that lets developers easily create a new Alexa skill integrated with Alfresco.

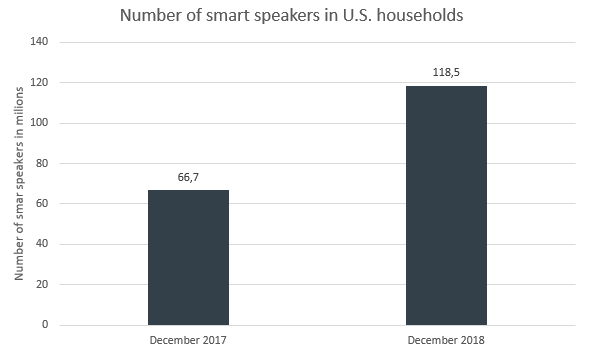

Numbers growing fast

I had no idea how fast the number of smart speaker users was growing until I saw the latest report from Edison Research.

Around 53 million Americans own a smart speaker like Amazon Alexa or Google Home. In the last year alone, the number of smart speakers in the U.S. grew by 78%. There is huge potential here, and maybe I shouldn’t be surprised: these devices are getting cheaper, their voice recognition keeps improving, and more skills become available every day.

Beginning

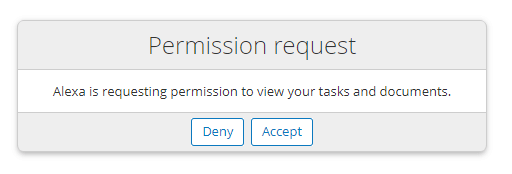

I started working on the project during the weekend before the conference. Amazon provides an official Java SDK for Alexa, so the basic integration was easy to build. The first thing I needed was a mechanism to link accounts between the Alexa skill and Alfresco Share. This was one of the most important pieces, because the skill implementation must run in the user’s context. You should link your accounts right after enabling the Alfresco skill.

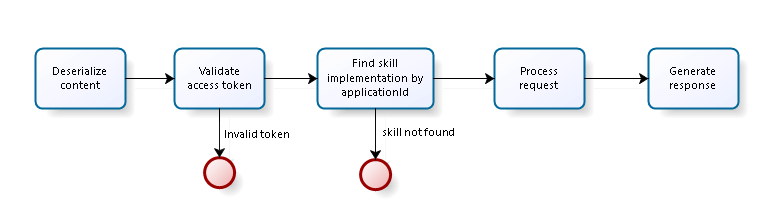

Next, I had to create a webscript that works as the endpoint for the skill. It authorizes the user and executes the skill implementation that matches the request.

How does it work?

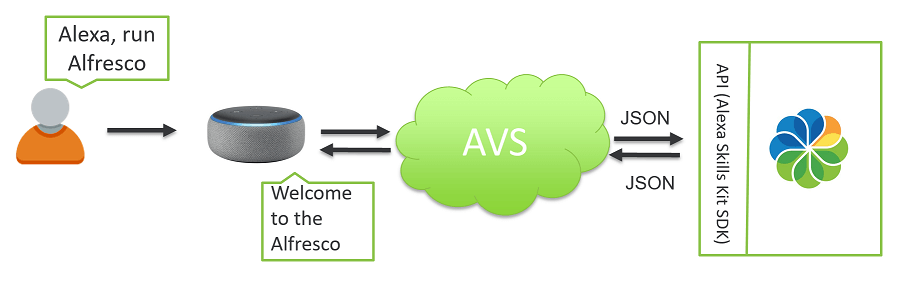

- The user’s speech is streamed to the Amazon Voice Service.

- Amazon Voice Service recognizes the skill and sends a JSON request to the ACS endpoint webscript.

- The ACS webscript processes the request and sends a JSON response.

- The Alexa-enabled device speaks the response back to the user.

Flow of an Alexa skill request

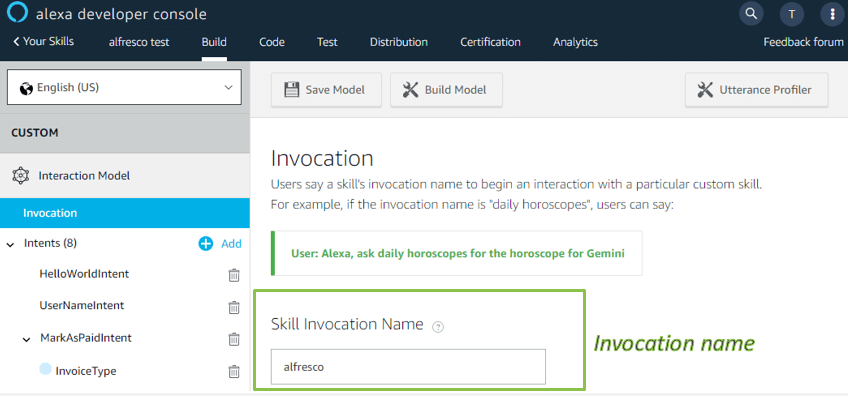
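To make the request flow more concrete, here is a minimal sketch of the dispatch step: look up a handler by intent name and wrap its text in the JSON envelope Alexa expects. The class `IntentDispatcherSketch` and its methods are hypothetical names used only for illustration; they are not the AMP’s actual code, and a real endpoint would also parse the incoming request, verify it and authorize the user.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.function.Supplier;

// Hypothetical stand-in for the endpoint's dispatch step; not the AMP's actual code.
class IntentDispatcherSketch {

    // Maps an intent name (as configured in the Alexa console) to the code that answers it.
    private final Map<String, Supplier<String>> handlers = new LinkedHashMap<>();

    void register(String intentName, Supplier<String> handler) {
        handlers.put(intentName, handler);
    }

    // Returns the JSON body sent back to Alexa for the given intent name.
    String handle(String intentName) {
        Supplier<String> handler = handlers.get(intentName);
        String text = (handler != null) ? handler.get() : "Sorry, I don't know how to do that.";
        return toAlexaResponse(text, true);
    }

    // Builds a minimal Alexa response envelope with plain-text speech.
    static String toAlexaResponse(String text, boolean endSession) {
        return "{\"version\":\"1.0\",\"response\":{"
                + "\"outputSpeech\":{\"type\":\"PlainText\",\"text\":\"" + escape(text) + "\"},"
                + "\"shouldEndSession\":" + endSession + "}}";
    }

    // Escapes backslashes and double quotes only; enough for this sketch.
    static String escape(String s) {
        return s.replace("\\", "\\\\").replace("\"", "\\\"");
    }

    public static void main(String[] args) {
        IntentDispatcherSketch dispatcher = new IntentDispatcherSketch();
        dispatcher.register("HelloWorldIntent", () -> "Hello Alfresco!");
        System.out.println(dispatcher.handle("HelloWorldIntent"));
    }
}
```

In a real project the JSON would be produced by the Alexa Java SDK or a JSON library rather than by string concatenation; the sketch only shows the shape of the response.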

Skill configuration

If you want to try it, you first need an account on the Alexa developer console, which is used to manage the skill’s configuration.

To create a new skill:

- Open the developer console.

- Click Your Alexa Consoles and then click Skills.

- Click Create Skill.

- Enter the skill name and default language.

- Select the Custom model.

- Click Create skill.

Skill components

Now you should configure it. First, there is the invocation name, which identifies the skill. The user includes this name when starting a conversation with your skill.

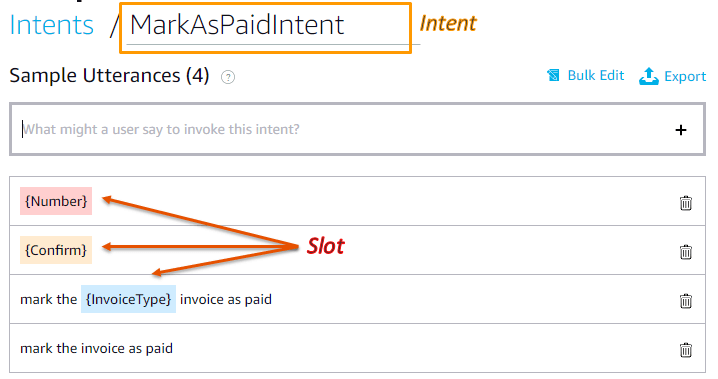

Each skill can have many intents. An intent represents an action that users can invoke in your skill. Intents can take arguments called slots, which carry variable information.

Sample utterances are the phrases users can say to invoke those intents.

Endpoint configuration

You need to specify the endpoint for your skill. Choose HTTPS as the endpoint type and enter the URL:

https://[your-domain]/alfresco/service/api/alexa/endpoint

Account Linking

In this section, enable the option Do you allow users to create an account or link to an existing account with you?, select Implicit Grant, and enter:

https://[your-domain]/share/page/alexa-authentication

as the Authorization URI. Paste your skill ID (it starts with something like amzn1.ask.skill…) into the Client ID field. Use two scopes, documents and tasks, and don’t forget to add your domain to the Domain List.

Read the Amazon documentation for more details.
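With Implicit Grant, Alexa opens the Authorization URI with `state` and `redirect_uri` query parameters, and after the user logs in the page redirects back to that `redirect_uri` with the token in the URL fragment. The following is a minimal sketch of building that redirect. The class name `ImplicitGrantRedirectSketch` is hypothetical, and how the Share page actually obtains the access token is not shown here; the token value is an assumption passed in by the caller.

```java
import java.io.UnsupportedEncodingException;
import java.net.URLEncoder;

// Hypothetical helper; the AMP's own authentication page may be implemented differently.
class ImplicitGrantRedirectSketch {

    // Appends the values Alexa expects in the URL fragment after a successful login.
    static String buildRedirect(String redirectUri, String state, String accessToken) {
        return redirectUri
                + "#state=" + encode(state)
                + "&access_token=" + encode(accessToken)
                + "&token_type=Bearer";
    }

    // Percent-encodes a value; spaces become %20 instead of '+'.
    static String encode(String value) {
        try {
            return URLEncoder.encode(value, "UTF-8").replace("+", "%20");
        } catch (UnsupportedEncodingException e) {
            throw new IllegalStateException("UTF-8 is always available", e);
        }
    }

    public static void main(String[] args) {
        // Placeholder values for illustration only.
        System.out.println(buildRedirect("https://example.com/alexa/callback", "abc123", "demo-token"));
    }
}
```

The `state` value must be returned unchanged, because Alexa uses it to match the redirect to the linking attempt it started.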

Alfresco implementation

Your class should implement one of the interfaces from the AMP: AlfrescoVoiceSimpleIntent or AlfrescoVoiceSessionIntent. The table below compares the two interfaces:

| | AlfrescoVoiceSimpleIntent | AlfrescoVoiceSessionIntent |
| --- | --- | --- |
| user context | + | + |
| text/voice response | + | + |
| session attributes | – | + |
| slots | – | + |
| session control | – | + |

So, if your intent doesn’t need session attributes or intent arguments (slots), you can use AlfrescoVoiceSimpleIntent. Otherwise, use AlfrescoVoiceSessionIntent.
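To illustrate the difference between the two kinds of intent, here is a small sketch using stand-in interfaces. `SimpleIntentSketch`, `SessionIntentSketch`, the `searchTerm` slot and the `searchCount` attribute are all hypothetical names invented for this example; the real AlfrescoVoiceSessionIntent may declare different methods, so check the project source for the actual signatures.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical stand-ins; the AMP's real interfaces may declare different methods.
interface SimpleIntentSketch {
    String getResponse();
}

interface SessionIntentSketch {
    // slots: values extracted from the utterance; session: attributes kept between turns.
    String getResponse(Map<String, String> slots, Map<String, Object> session);
}

class IntentKindsSketch {

    // Stateless: always answers the same way, needs no slots or session.
    static final SimpleIntentSketch HELLO = () -> "Hello Alfresco!";

    // Stateful: reads a slot and counts how many searches happened in this session.
    static final SessionIntentSketch SEARCH = (slots, session) -> {
        String term = slots.getOrDefault("searchTerm", "anything");
        int count = ((Integer) session.getOrDefault("searchCount", 0)) + 1;
        session.put("searchCount", count);
        return "Search number " + count + " for " + term;
    };

    public static void main(String[] args) {
        Map<String, String> slots = new HashMap<>();
        slots.put("searchTerm", "invoices");
        Map<String, Object> session = new HashMap<>();
        System.out.println(HELLO.getResponse());
        System.out.println(SEARCH.getResponse(slots, session));
        System.out.println(SEARCH.getResponse(slots, session));
    }
}
```

The second call to `SEARCH` returns a different answer from the first because the session map carries state between turns, which is exactly what a simple intent cannot do.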

HelloWorld example:

    package org.alfresco.alexa.sample;

    import org.alfresco.alexa.AlfrescoVoiceSimpleIntent;
    import org.alfresco.alexa.AlfrescoVoiceSkill;

    public class HelloSkill extends AlfrescoVoiceSkill implements AlfrescoVoiceSimpleIntent {

        @Override
        protected void registerIntents() {
            this.registerSimpleIntent("HelloWorldIntent", this);
        }

        @Override
        public String getResponse() {
            return "Hello Alfresco!";
        }
    }

Bean configuration

HelloWorld bean configuration:

    <bean id="alexa.sample.hello" class="org.alfresco.alexa.sample.HelloSkill" parent="alexa.skill">
        <property name="skillName">
            <value>Hello</value>
        </property>
        <property name="skillId">
            <value>amzn1.ask.skill.6aac1bad-4248-4c3f-9575-5d2064eda0ce</value>
        </property>
        <property name="helpText">
            <value>Say hello</value>
        </property>
        <property name="welcomeText">
            <value>Welcome to the Alfresco</value>
        </property>
    </bean>

- skillName – the title of the skill shown on screen-enabled devices

- skillId – the skill ID from the Alexa developer console; you can find it here, just click View Skill ID

- helpText – the text spoken when the user asks for help with this skill. To change this behaviour, override the getHelpIntentHandler method

- welcomeText – the text spoken when the skill is invoked. To change this behaviour, override the getLaunchIntentHandler method

Hackathon day

As last year, every team leader said a few words about their project at the beginning of the hackathon. This was aimed at those who hadn’t picked a project before the conference but wanted to take part in one. Luckily, one person, Uros from Serbia, found my project interesting and joined my team. He helped me with bug fixing and with testing one of the usage examples.

Presentation

You can see my presentation here (Keynotes & Panels > Hackathon showcase > from 20:40). Slides can be found here.

Download

You can download the project source from GitHub.

To-do list

What can be added to the project?

- multi-language support (a label ID in the bean configuration instead of the label text)

- notification ability – Alexa’s notification feature has not been made public yet, so I used the Notify Me skill during the presentation instead

- Keycloak authorization

- better project configuration for multiple intents in one skill
